Generated by ChatGPT

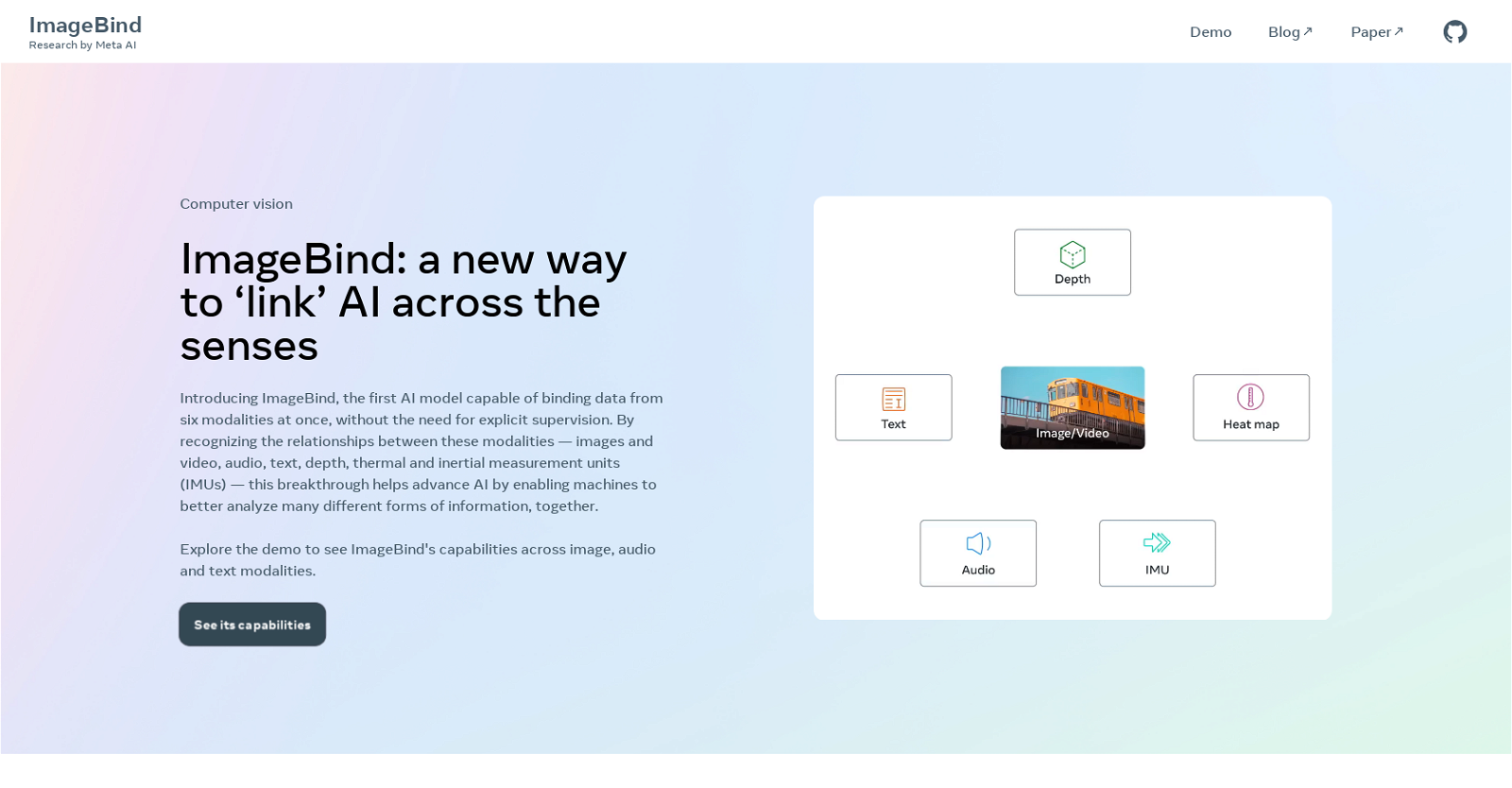

ImageBind is an AI model developed by Meta AI that binds data from six modalities into one representation: images and video, audio, text, depth, thermal, and inertial measurement unit (IMU) data. By recognizing the relationships between these modalities, ImageBind lets machines analyze many different forms of information together. According to Meta, it is the first model to learn a single embedding space across all six modalities without explicit supervision.

Because every modality maps into the same embedding space, existing AI models can be extended to accept input from any of the six. This enables audio-based search, cross-modal search, multimodal arithmetic, and cross-modal generation. It also improves zero-shot and few-shot recognition across modalities, where ImageBind is reported to outperform prior specialist models that were trained explicitly for a single modality.

The ImageBind team has released the code and model weights under a non-commercial Creative Commons license (CC-BY-NC 4.0), so developers can use and integrate the model in non-commercial applications as long as they comply with the license terms. Overall, ImageBind has the potential to advance machine learning significantly by making it practical to analyze different forms of information jointly.
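To make the idea of a shared embedding space concrete, here is a minimal sketch of cross-modal search and multimodal arithmetic. It does not call ImageBind: the three-dimensional vectors are hand-made toy values, the file names are hypothetical, and the helper functions (`normalize`, `cosine`, `nearest`) are illustrative names chosen for this example. Cosine similarity is assumed as the comparison measure.

```python
import math


def normalize(v):
    """Scale a vector to unit length so dot products equal cosine similarity."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def cosine(a, b):
    """Cosine similarity between two vectors of equal length."""
    return sum(x * y for x, y in zip(normalize(a), normalize(b)))


def nearest(query, candidates):
    """Return the key of the candidate embedding most similar to the query."""
    return max(candidates, key=lambda name: cosine(query, candidates[name]))


# Toy embeddings (assumption: hand-made, NOT produced by ImageBind).
# The axes loosely stand for "dog", "beach", and "engine" content.
image_embeddings = {
    "dog_in_park.jpg": [0.9, 0.1, 0.0],
    "empty_beach.jpg": [0.0, 1.0, 0.1],
    "dog_on_beach.jpg": [0.7, 0.7, 0.0],
    "car_on_road.jpg": [0.0, 0.1, 0.9],
}
audio_embeddings = {
    "barking.wav": [1.0, 0.0, 0.1],
    "waves.wav": [0.1, 0.9, 0.0],
    "engine.wav": [0.0, 0.0, 1.0],
}

# Cross-modal search: use an audio clip as the query and rank images.
match = nearest(audio_embeddings["barking.wav"], image_embeddings)
print(match)  # dog_in_park.jpg

# Multimodal arithmetic: add an image embedding and an audio embedding,
# then look up the image closest to the combined vector.
combined = [
    a + b
    for a, b in zip(
        normalize(image_embeddings["empty_beach.jpg"]),
        normalize(audio_embeddings["barking.wav"]),
    )
]
composed = nearest(combined, image_embeddings)
print(composed)  # dog_on_beach.jpg
```

The point of the sketch is that once all modalities share one vector space, search and composition reduce to ordinary vector operations. In a real system the toy dictionaries would be replaced by embeddings computed by the model's per-modality encoders.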