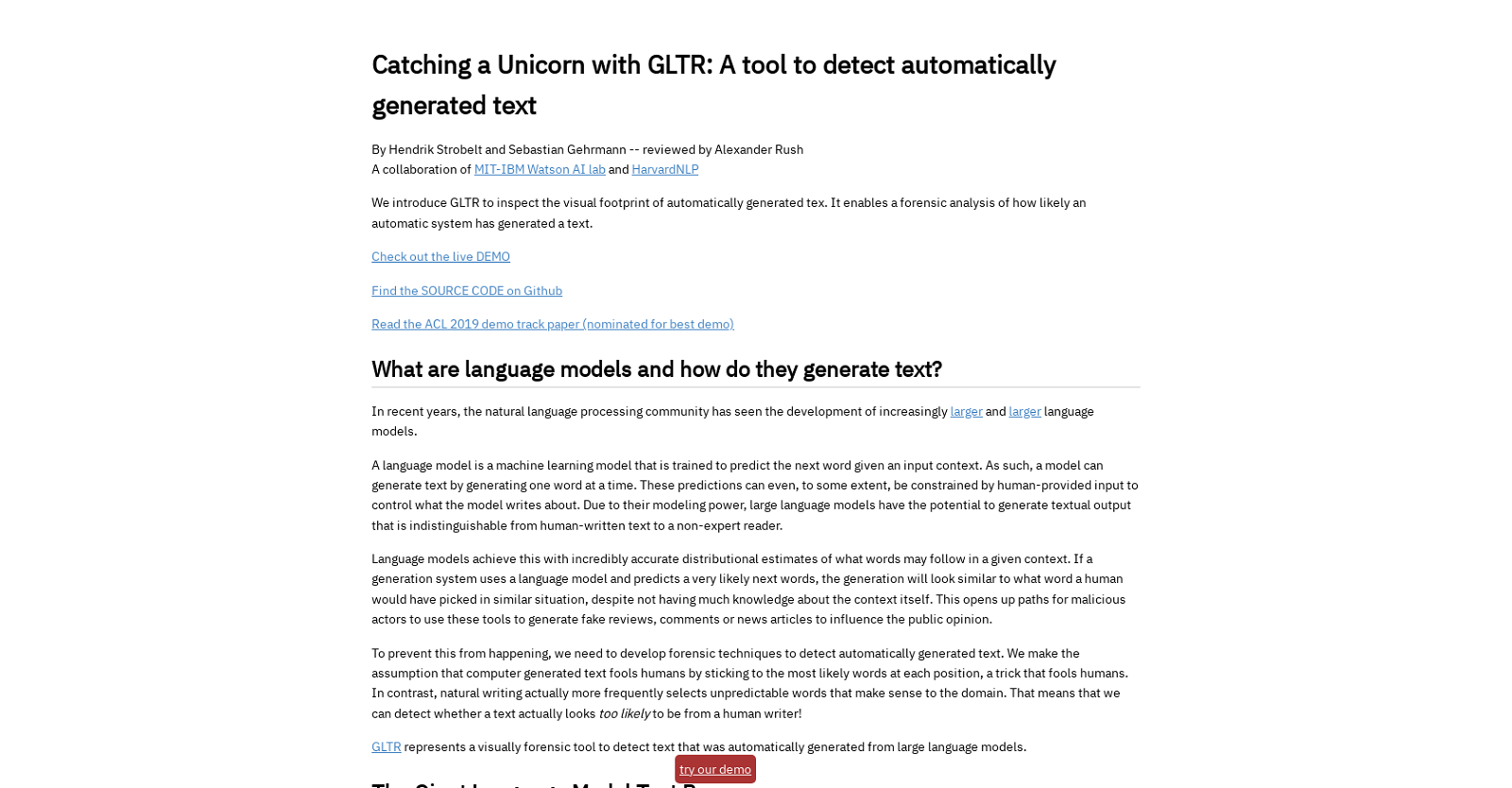

GLTR is a tool developed by the MIT-IBM Watson AI Lab and HarvardNLP that uses forensic analysis to detect automatically generated text. It works by estimating how likely it is that a language model produced a given text.

GLTR runs the text through OpenAI's GPT-2 117M language model and ranks each word by how likely the model was to predict it in that position. It then highlights each word according to its rank: green for words in the model's top 10 predictions, yellow for the top 100, red for the top 1,000, and purple for everything else. This gives a direct visual indication of how likely each word was under the model, which makes computer-generated text easier to identify.

GLTR also shows three histograms that aggregate information over the whole text. The first counts how many words fall into each color category. The second shows the ratio between the probability of the top predicted word and that of the word that actually follows. The third shows the distribution of the entropies of the model's predictions. Together, these histograms provide additional evidence of whether a text was artificially generated.

GLTR can be used to detect fake reviews, comments, or news articles produced by large language models, which can generate text that a non-expert reader cannot distinguish from human writing. GLTR is available as a live demo, its source code is on GitHub, and researchers can read the accompanying ACL 2019 demo track paper, which was nominated for best demo.
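The rank-and-bucket idea behind GLTR's coloring and histograms can be sketched in a few lines of Python. This is a minimal illustration, not GLTR's source code: it assumes the model's next-word distribution at each position is already available as a plain dictionary (in GLTR that would come from GPT-2 117M), and the helper names (`rank_of`, `color`, `entropy`, `analyse`, `zipf_dist`) and the toy Zipf-shaped distribution are hypothetical. The ratio is taken here as p(observed word) / p(top prediction), and entropy is computed over the whole supplied distribution; GLTR's exact definitions may differ.

```python
import math
from collections import Counter

# Rank thresholds mirroring GLTR's color scheme (assumed here):
# top 10 -> green, top 100 -> yellow, top 1,000 -> red, otherwise purple.
BUCKETS = [(10, "green"), (100, "yellow"), (1000, "red")]


def rank_of(token, dist):
    """1-based rank of `token` in `dist` (a token -> probability mapping)."""
    p = dist.get(token, 0.0)
    # Rank = 1 + number of candidates the model considered strictly more likely.
    return 1 + sum(1 for q in dist.values() if q > p)


def color(rank):
    """Map a rank to a GLTR-style highlight color."""
    for limit, name in BUCKETS:
        if rank <= limit:
            return name
    return "purple"


def entropy(dist):
    """Shannon entropy (in bits) of one predicted next-word distribution."""
    return -sum(p * math.log2(p) for p in dist.values() if p > 0)


def analyse(tokens, dists):
    """Return per-token rows and the color histogram for a whole text.

    tokens[i] is the observed word at position i; dists[i] is the model's
    predicted distribution for that position (hypothetical input format).
    """
    rows = []
    for token, dist in zip(tokens, dists):
        r = rank_of(token, dist)
        top_p = max(dist.values())
        # Ratio of the observed word's probability to the top prediction's.
        frac = dist.get(token, 0.0) / top_p
        rows.append((token, r, color(r), frac, entropy(dist)))
    # Analogue of the first histogram: how many words fall in each color.
    histogram = Counter(row[2] for row in rows)
    return rows, histogram


def zipf_dist(n):
    """Toy stand-in for a model prediction: p(w_i) proportional to 1/(i+1)."""
    weights = [1.0 / (i + 1) for i in range(n)]
    total = sum(weights)
    return {f"w{i}": w / total for i, w in enumerate(weights)}


if __name__ == "__main__":
    dist = zipf_dist(2000)
    tokens = ["w0", "w49", "w499", "w1499"]
    rows, histogram = analyse(tokens, [dist] * len(tokens))
    for token, r, c, frac, h in rows:
        print(f"{token:>6} rank={r:<5} {c:<7} frac={frac:.4f} H={h:.2f} bits")
    print(dict(histogram))
```

With the toy distribution, the four observed words land at ranks 1, 50, 500 and 1,500, so each falls into a different color band. Real model output would replace `zipf_dist`; a text dominated by green words is the pattern GLTR is designed to surface, since sampling from a model tends to favor its top-ranked words.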

Detecting misinformation and deepfakes.

Analyzing sentences for written integrity verification.

Enhancing content authenticity via rewriting.

Checks content for originality and plagiarism.

Detecting copied or generated content.

Content proofing.

Improving writing and ensuring academic integrity.

Recognizes synthetic text.

Detects text plagiarism and its sources.

Detect machine vs human text for content management.

Detects fake text with high accuracy.

Ensures reliable reviews by validating text.

Discover WriteHuman's AI Detector: distinguishing between AI-generated and human-written text.

Authenticate and detect plagiarism in content.

Helps teachers check essays for plagiarism and originality.

Write with confidence and bypass AI detection with BypassDetection.

Evaluate & monitor generative models.

Undetect humanizes & rewrites content.

Identify and flag text for content verification.

Validated text content generation.

Detect AI-generated text in a click.

Authenticity detection for human & machine text.

Differentiates human vs. synthetic text.

Simplifying the detection of AI-generated text.

Detecting plagiarism and verifying content.

Badge indicating human-generated content.

Innovative, fast and easy-to-use plagiarism checker.

Detects ChatGPT-generated content and plagiarism.

Detects generated text across domains.

Verification of content authenticity.

Content verification and plagiarism detection solution.

Classifies human vs machine text.

Plagiarism detection for educational institutions.

Enhanced content creation and analysis for publishers.

Sorts text into human and machine content.

Discover the power of AI detection on Twitter.

Analyzes doctored media.

Differentiating human from generated text.

Kodora is Australia’s leading AI consulting and technology firm. We deliver AI strategy, automation, security, and workforce training to help businesses scale with confidence. Kodora is proudly sovereign, trusted by enterprise and government to manage end-to-end AI capability.

Copyright © 2023-2025 KODORA PTY LTD. All rights reserved. Privacy Policy
