Generated by ChatGPT

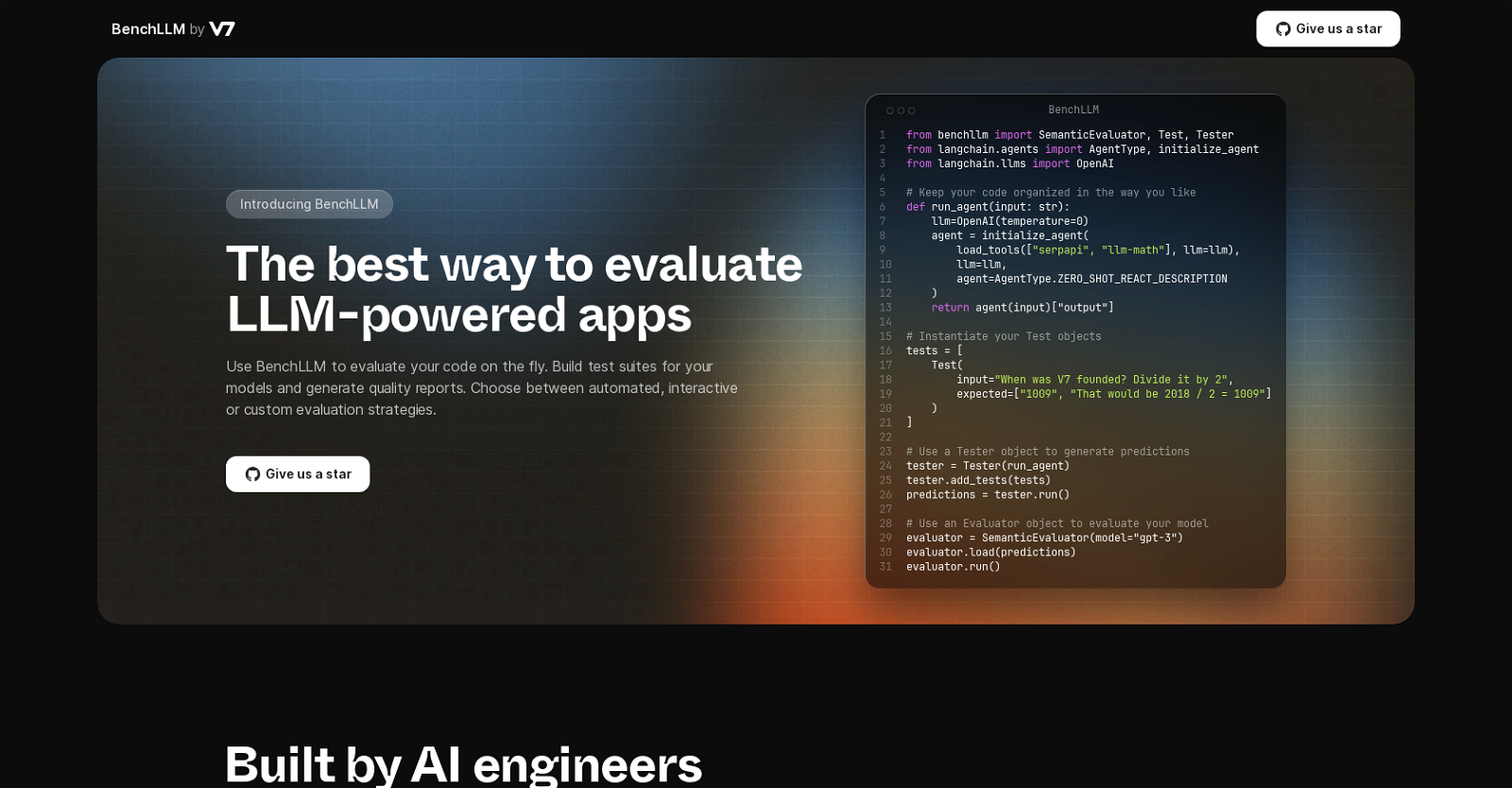

BenchLLM is an evaluation tool designed for AI engineers. It allows users to evaluate their large language models (LLMs) in real time. The tool lets users build test suites for their models and generate quality reports. Users can choose between automated, interactive, or custom evaluation strategies.

To use BenchLLM, engineers can organize their code in whatever way suits their preferences. The tool supports integration with different AI tools such as “serpapi” and “llm-math”. It also offers an “OpenAI” integration with an adjustable temperature parameter.

The evaluation process involves creating Test objects and adding them to a Tester object. Each test defines a specific input and the expected outputs for the LLM. The Tester object generates predictions from the provided inputs, and these predictions are then loaded into an Evaluator object.

The Evaluator object uses the SemanticEvaluator with the “gpt-3” model to evaluate the LLM. By running the Evaluator, users can assess the performance and accuracy of their model.

The creators of BenchLLM are a team of AI engineers who built the tool to address the need for an open and flexible LLM evaluation tool. They value the power and flexibility of AI while striving for predictable and reliable results. BenchLLM aims to be the benchmark tool that AI engineers have always wished for.

Overall, BenchLLM offers AI engineers a convenient and customizable way to evaluate their LLM-powered applications: building test suites, generating quality reports, and assessing model performance.
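
The Test, Tester, and Evaluator flow described above can be illustrated with a small, self-contained Python sketch. This is not BenchLLM's own code or API: the classes `LLMTest`, `Prediction`, `Tester`, and `ExactMatchEvaluator`, the `toy_model` function, and the `run_suite` helper are all hypothetical stand-ins written for illustration. In particular, the evaluator here uses plain string matching, whereas a semantic evaluator would ask an LLM to judge whether the output matches an expected answer.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class LLMTest:
    """Illustrative analogue of a Test object: one input plus accepted outputs."""
    input: str
    expected: List[str]


@dataclass
class Prediction:
    """The output the model produced for a given test."""
    test: LLMTest
    output: str


class Tester:
    """Collects tests and runs the model under test on each input."""

    def __init__(self, model_fn: Callable[[str], str]) -> None:
        self.model_fn = model_fn
        self.tests: List[LLMTest] = []

    def add_tests(self, tests: List[LLMTest]) -> None:
        self.tests.extend(tests)

    def run(self) -> List[Prediction]:
        return [Prediction(t, self.model_fn(t.input)) for t in self.tests]


class ExactMatchEvaluator:
    """Stand-in evaluator (assumption): a prediction passes when its output
    equals one of the expected answers exactly."""

    def __init__(self) -> None:
        self.predictions: List[Prediction] = []

    def load(self, predictions: List[Prediction]) -> None:
        self.predictions = list(predictions)

    def run(self) -> List[bool]:
        return [p.output.strip() in p.test.expected for p in self.predictions]


def toy_model(prompt: str) -> str:
    # Hypothetical model under test; a real one would call an LLM.
    answers = {"What is 1+1?": "2", "Capital of France?": "Lyon"}
    return answers.get(prompt, "")


def run_suite() -> List[bool]:
    # 1. Create tests and add them to the tester.
    tester = Tester(toy_model)
    tester.add_tests([
        LLMTest(input="What is 1+1?", expected=["2", "It's 2"]),
        LLMTest(input="Capital of France?", expected=["Paris"]),
    ])
    # 2. Generate predictions, 3. load them into the evaluator, 4. run it.
    predictions = tester.run()
    evaluator = ExactMatchEvaluator()
    evaluator.load(predictions)
    return evaluator.run()


if __name__ == "__main__":
    print(run_suite())
```

Separating the step that generates predictions from the step that judges them means the same predictions can be scored by different evaluation strategies (automated, interactive, or custom) without calling the model again.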