Australian leaders are embracing AI to accelerate growth, streamline operations and improve customer experience, yet many encounter the same uncomfortable question: how do artificial intelligence hallucinations occur in systems that otherwise perform so impressively?

Hallucinations are convincing but incorrect outputs that arise when a model generates content untethered from facts or the available context. In domains like finance, healthcare, legal services and public sector service delivery, even a small rate of confident error can create material regulatory, reputational and operational risk.

Benchmarks consistently show that large language models produce factual mistakes on open‑ended tasks, with error rates that vary widely by domain, prompt and configuration. Independent evaluations report double‑digit error rates on challenging knowledge queries, while task‑specific tuning and grounding can reduce these rates significantly.

In 2023, a US federal court sanctioned lawyers for filing a brief containing AI‑fabricated case citations (Mata v. Avianca), a cautionary tale for any enterprise deploying generative systems without guardrails. The Australian Government’s 2024 interim response on Safe and Responsible AI underscores the need for testing, transparency and accountability, principles that map directly to controlling hallucinations in production.

How do artificial intelligence hallucinations occur? The mechanics behind confident errors

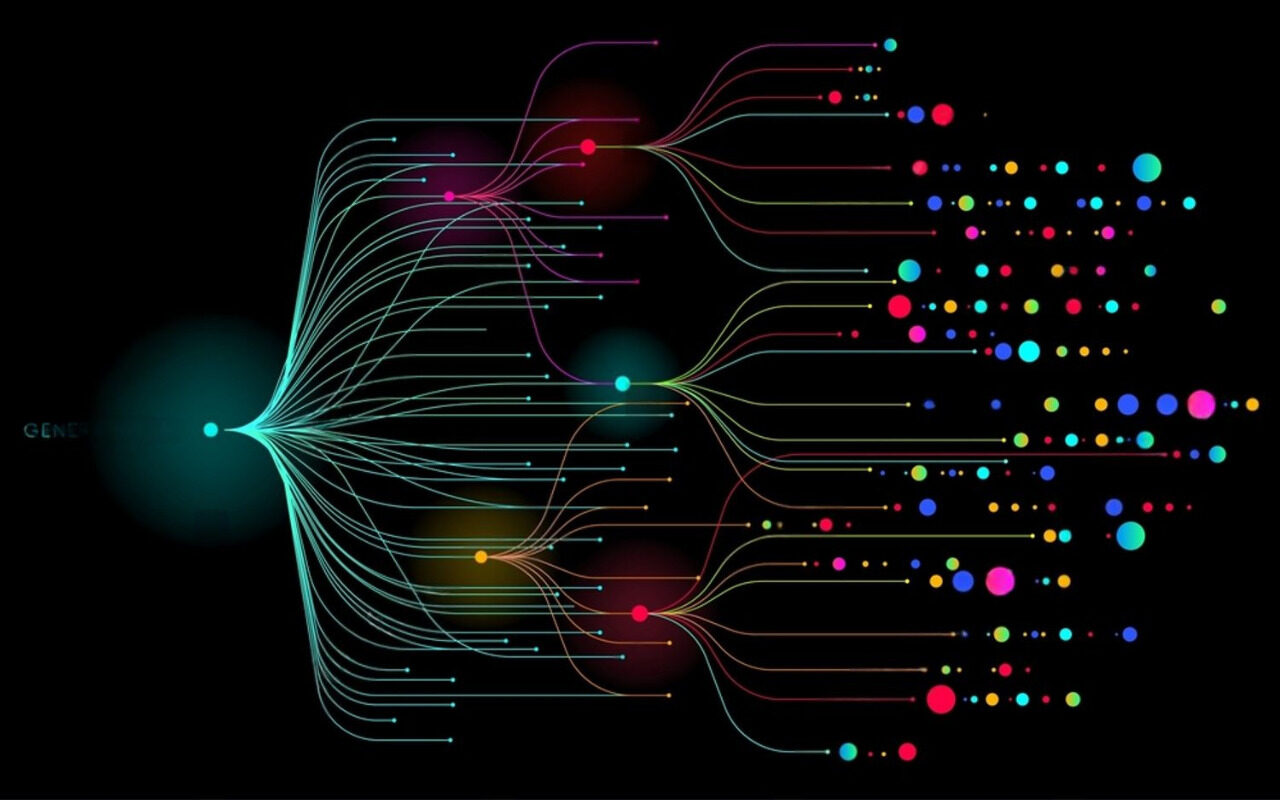

At the model level, modern systems generate text by predicting the next token from patterns learned across vast corpora. This probabilistic process does not “know” facts; it estimates what is plausible. When the training distribution differs from your domain (distribution shift), the model can produce fluent, on‑topic but incorrect statements. Exposure bias amplifies the problem: models are trained to continue gold‑standard text, but in deployment they must continue their own imperfect generations, so small deviations can compound into larger errors over longer outputs.
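
To make the compounding effect concrete, the toy calculation below assumes every token has the same, independent chance of being wrong. Real errors are neither uniform nor independent (exposure bias makes later errors more likely once an early one slips in, so this toy model if anything understates the problem), and the 0.1% per‑token rate is an arbitrary illustrative figure, not a measurement of any system.

```python
def prob_error_free(per_token_error: float, num_tokens: int) -> float:
    """Chance that all num_tokens tokens are correct under a toy model in which
    each token independently has the same error probability (an assumption)."""
    if not 0.0 <= per_token_error <= 1.0:
        raise ValueError("per_token_error must be between 0 and 1")
    if num_tokens < 0:
        raise ValueError("num_tokens must be non-negative")
    return (1.0 - per_token_error) ** num_tokens


if __name__ == "__main__":
    # Hypothetical 0.1% per-token error rate, chosen only for illustration.
    for length in (10, 100, 500):
        chance = prob_error_free(0.001, length)
        print(f"{length:>4} tokens: {chance:.3f} chance of no error")
```

Under these assumptions the chance of an error‑free output falls from about 99% at 10 tokens to roughly 90% at 100 tokens and about 61% at 500 tokens, which is why long, unconstrained answers deserve extra scrutiny.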

Data and knowledge gaps contribute directly. If the underlying training data is sparse, outdated or contradicts your authoritative sources, the model fills gaps with the most statistically likely completion rather than a verified truth. Knowledge cutoff dates exacerbate this; without retrieval or tool use, the model cannot access updates, policies or prices that changed after training. This is why a model can confidently cite an outdated regulation or an obsolete medical guideline unless grounded in current, curated data.

Decoding choices matter. Higher temperatures and broader sampling (for example, top‑p values near 1.0) increase diversity but also raise the chance of stepping off the factual path. Lower temperatures reduce variability but do not eliminate hallucinations. Tight length limits can truncate reasoning, while unconstrained outputs can drift into speculation. Even deterministic (greedy) decoding produces errors when the most probable continuation is wrong for your context.
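
The sketch below shows how temperature and top‑p interact on a single next‑token decision. It is a toy re‑implementation of standard temperature scaling plus nucleus sampling, not any vendor's sampler: real decoders work on vocabulary‑sized logit vectors inside the model runtime, and the token scores here are invented for illustration.

```python
import math
import random
from typing import Dict, Optional


def sample_next_token(
    logits: Dict[str, float],
    temperature: float = 1.0,
    top_p: float = 1.0,
    rng: Optional[random.Random] = None,
) -> str:
    """Pick the next token using temperature scaling and nucleus (top-p) sampling."""
    if not logits:
        raise ValueError("logits must not be empty")
    if temperature <= 0:
        # Treat temperature 0 as greedy decoding: always take the top-scoring token.
        return max(logits, key=logits.get)
    rng = rng or random.Random()
    # Divide scores by temperature, then apply a numerically stable softmax.
    scaled = {tok: score / temperature for tok, score in logits.items()}
    peak = max(scaled.values())
    weights = {tok: math.exp(s - peak) for tok, s in scaled.items()}
    total = sum(weights.values())
    ranked = sorted(((tok, w / total) for tok, w in weights.items()),
                    key=lambda pair: pair[1], reverse=True)
    # Keep the smallest set of top tokens whose cumulative probability reaches top_p.
    nucleus, cumulative = [], 0.0
    for tok, prob in ranked:
        nucleus.append((tok, prob))
        cumulative += prob
        if cumulative >= top_p:
            break
    tokens, probs = zip(*nucleus)
    return rng.choices(tokens, weights=probs, k=1)[0]


# Hypothetical scores for completing "Australia federated in ..." (correct: 1901).
toy_logits = {"1901": 3.0, "1900": 2.0, "1910": 0.5}
rng = random.Random(7)
for temp, p in [(0.0, 1.0), (0.7, 0.5), (2.0, 1.0)]:
    draws = [sample_next_token(toy_logits, temp, p, rng) for _ in range(1000)]
    wrong = sum(tok != "1901" for tok in draws)
    print(f"temperature={temp}, top_p={p}: {wrong}/1000 incorrect")
```

With greedy decoding, or a tight nucleus at moderate temperature, only the top‑ranked token survives; at temperature 2.0 with top‑p 1.0, wrong years are drawn a large share of the time. Greedy decoding is only "safe" here because the highest‑scoring token happens to be correct, which is exactly the limitation noted above for deterministic decoding.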

Prompting and instruction design strongly influence outcomes. Ambiguous prompts, compound instructions or requests to “assume” missing facts invite the model to fabricate connecting details. Long contexts can also degrade reliability: models can exhibit “lost in the middle” effects, where information placed mid‑context is overlooked, and answer quality tends to decline as contexts grow unless retrieved passages are carefully selected and reranked.
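
One common mitigation for "lost in the middle" effects is to reorder retrieved passages so the strongest evidence sits at the beginning and end of the context. A minimal sketch, assuming the retriever already returns passages sorted from most to least relevant; the passage labels are placeholders.

```python
from typing import List, Sequence


def reorder_for_long_context(passages_by_relevance: Sequence[str]) -> List[str]:
    """Place the most relevant passages at the edges of the context and push the
    least relevant toward the middle. Input must be sorted best-first."""
    front: List[str] = []
    back: List[str] = []
    for rank, passage in enumerate(passages_by_relevance):
        # Alternate passages between the front and the back of the context.
        if rank % 2 == 0:
            front.append(passage)
        else:
            back.append(passage)
    # Reverse the back half so the second-best passage ends up last.
    return front + back[::-1]


print(reorder_for_long_context(["p1", "p2", "p3", "p4", "p5"]))
```

For five passages ranked p1 to p5, the output is p1, p3, p5, p4, p2: the two most relevant passages bracket the context and the weakest lands in the middle. This complements, rather than replaces, keeping the context limited to genuinely relevant passages.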

System integration introduces additional failure modes. Retrieval‑augmented generation (RAG) reduces hallucinations by grounding answers in your knowledge base, but poor document chunking, stale indices, weak passage ranking or retrieval timeouts can surface irrelevant sources that the model then elaborates on confidently. Tool failures, such as API timeouts, calculator errors or unavailable databases, can push the model to guess rather than defer. Finally, reinforcement learning from human feedback can unintentionally reward a confident tone over factual rigour if evaluators prioritise style, incentivising “reward hacking”, where the model optimises for the appearance of helpfulness rather than accuracy.
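
A tool failure should surface as an explicit deferral rather than a gap the model might paper over. The sketch below assumes a hypothetical `lookup_fee` pricing client that raises an exception on timeouts or outages (for example, because its HTTP layer enforces its own timeout); the product name and fee are made up.

```python
from typing import Callable


class ToolUnavailable(Exception):
    """Raised by a (hypothetical) backing system that cannot return a trustworthy result."""


def answer_fee_question(product: str, lookup_fee: Callable[[str], str]) -> str:
    """Answer only from the tool's result; defer explicitly if the tool fails."""
    try:
        fee = lookup_fee(product)
    except (TimeoutError, ConnectionError, ToolUnavailable) as exc:
        # Do not let the model fill the gap: return an explicit deferral instead.
        return (f"I can't confirm the current fee for {product} right now "
                f"({type(exc).__name__}). Please check the official fee schedule.")
    return f"The current fee for {product} is {fee}, according to the pricing system."


def pricing_api_down(product: str) -> str:
    # Simulated outage standing in for a real pricing API timeout.
    raise TimeoutError("pricing API did not respond")


print(answer_fee_question("Everyday Account", lambda product: "$5 per month"))
print(answer_fee_question("Everyday Account", pricing_api_down))
```

The design choice is that the deferral path is ordinary control flow in the application, not something the model is trusted to notice on its own.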

Business impact in the Australian context

For Australian enterprises operating in regulated sectors, hallucinations can create consumer law exposure, mislead customers about fees or product features, and undermine duty of care. Financial services firms must consider ASIC guidance on the design and distribution obligations and monitor for misleading statements in digital advice and customer support. Public sector agencies face trust and legitimacy risks if generative systems provide incorrect information at scale. Even in lower‑risk creative applications, rework, manual verification and delayed time‑to‑market erode the expected productivity uplift from AI initiatives.

Reducing hallucinations with layered controls

Effective mitigation combines technical grounding with operational governance. Grounding with RAG ties outputs to a curated corpus, with strict citation requirements and answer construction that only uses retrieved passages. High‑quality indexing, semantic retrieval, cross‑encoder reranking and freshness policies help ensure the right evidence is surfaced at the right time. Constraining generation with schemas and function calling forces structured outputs and reduces free‑form speculation, while conservative decoding parameters for high‑stakes tasks lower variance.
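
Schema constraints and citation requirements can be checked mechanically before an answer is released. A minimal sketch, assuming the model has been instructed (or constrained via function calling) to return JSON with hypothetical `answer` and `citations` fields, where each citation is the ID of a retrieved passage. Output that fails validation would be regenerated or routed for review rather than shown to the user.

```python
import json
from typing import Iterable, List, Tuple


def validate_grounded_answer(raw_output: str,
                             retrieved_ids: Iterable[str]) -> Tuple[bool, List[str]]:
    """Check that output is a JSON object with a non-empty answer and citations
    drawn only from the passages that were actually retrieved."""
    try:
        data = json.loads(raw_output)
    except json.JSONDecodeError:
        return False, ["output is not valid JSON"]
    if not isinstance(data, dict):
        return False, ["output must be a JSON object"]
    problems: List[str] = []
    answer = data.get("answer")
    citations = data.get("citations")
    if not isinstance(answer, str) or not answer.strip():
        problems.append("missing or empty 'answer'")
    if not isinstance(citations, list) or not citations:
        problems.append("at least one citation is required")
    else:
        allowed = set(retrieved_ids)
        # Reject citations that are not strings or point at unretrieved passages.
        unknown = [c for c in citations if not isinstance(c, str) or c not in allowed]
        if unknown:
            problems.append(f"citations not in retrieved set: {unknown}")
    return not problems, problems


retrieved = ["fees-2024#p1", "fees-2024#p3"]
print(validate_grounded_answer('{"answer": "The fee is $5.", "citations": ["fees-2024#p3"]}', retrieved))
print(validate_grounded_answer('{"answer": "The fee is $5.", "citations": ["blog#p9"]}', retrieved))
```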

Calibration and verification are essential. Self‑consistency techniques and multi‑pass reasoning can reduce single‑shot errors, and automated verifiers can cross‑check facts against trusted APIs or knowledge graphs before content is released. Where risk is material, human‑in‑the‑loop review remains the gold standard, with workflows that escalate uncertain responses based on confidence scores or retrieval coverage. Continuous evaluation using domain‑specific factuality and faithfulness tests, alongside red‑teaming for prompt injection and jailbreaking, provides the metrics required to manage model risk proactively.
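
Self‑consistency can be as simple as sampling several answers and measuring how often they agree, with low agreement triggering human review. The sketch assumes a hypothetical `generate` callable that returns one sampled answer per call and compares answers by exact string match after trimming whitespace, which only suits short, canonical answers such as numbers or dates. The 0.6 agreement threshold is an arbitrary example to be tuned per use case.

```python
from collections import Counter
from typing import Callable, Dict, Union


def self_consistent_answer(
    generate: Callable[[], str],
    n_samples: int = 5,
    min_agreement: float = 0.6,
) -> Dict[str, Union[str, float, bool]]:
    """Majority-vote over several sampled answers and flag weak agreement."""
    if n_samples < 1:
        raise ValueError("n_samples must be at least 1")
    samples = [generate().strip() for _ in range(n_samples)]
    answer, votes = Counter(samples).most_common(1)[0]
    agreement = votes / n_samples
    return {
        "answer": answer,
        "agreement": agreement,
        # Escalate to a human reviewer when the samples disagree too often.
        "escalate": agreement < min_agreement,
    }


# Simulated outputs standing in for repeated sampled generations.
stable = iter(["1901", "1901", "1900", "1901", "1901"])
unstable = iter(["1901", "1900", "1910", "1901", "1899"])
print(self_consistent_answer(lambda: next(stable)))    # 4/5 agree: released
print(self_consistent_answer(lambda: next(unstable)))  # 2/5 agree: escalated
```

Agreement is a proxy for confidence, not proof of correctness: a model can be consistently wrong, so this check sits alongside grounding and verification rather than replacing them.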

Clear communication builds trust. Prominently disclosing model limitations and knowledge cutoffs, highlighting cited sources, and signalling uncertainty when evidence is thin help users interpret outputs appropriately. In Australia’s evolving regulatory environment, maintaining audit logs of prompts, retrieved evidence and model versions supports accountability and incident response.
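
An audit trail is easiest to query when each generation is written as one self‑describing record. A minimal sketch, assuming append‑only JSON Lines storage; the field names, passage IDs and model version string are hypothetical.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import List


@dataclass
class AuditRecord:
    model_version: str
    prompt: str
    retrieved_ids: List[str]
    output: str
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def to_json_line(self) -> str:
        """Serialise the record with a SHA-256 digest of its contents."""
        body = asdict(self)
        canonical = json.dumps(body, sort_keys=True)
        # The digest reveals later edits to the record; for tamper-evidence it
        # should also be stored or chained somewhere the record's editor can't reach.
        body["sha256"] = hashlib.sha256(canonical.encode("utf-8")).hexdigest()
        return json.dumps(body, sort_keys=True)


record = AuditRecord(
    model_version="example-model-2024-06",  # hypothetical identifier
    prompt="What is the monthly fee on the Everyday Account?",
    retrieved_ids=["fees-2024#p3"],
    output="The monthly fee is $5 [fees-2024#p3].",
)
print(record.to_json_line())
```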

What good looks like: an operating model for reliability

Leaders who succeed treat hallucination control as a continuous capability, not a one‑off fix. They define high‑risk use cases and acceptance criteria up front, select models based on the hallucination base rates observed in their own domain, and pair them with robust retrieval against a governed content lake. They deploy guardrails that validate inputs and outputs, enforce citations and block unsafe behaviours. They instrument every application with analytics that track factuality, correction rates, user overrides and downstream incidents, then feed those insights into prompt refinement, retrieval tuning and data curation. Above all, they align accountability across product, risk, legal and engineering so trade‑offs are explicit and well‑governed.
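
The instrumentation described above can start as a simple roll‑up of per‑response events, checked against acceptance criteria agreed in advance. The event fields and thresholds below are hypothetical placeholders; real definitions of "factual" and "corrected" would come from your evaluation harness and review workflow.

```python
from dataclasses import dataclass
from typing import Dict, Iterable


@dataclass
class ResponseEvent:
    factual: bool        # passed an automated or human factuality check
    corrected: bool      # a reviewer edited the answer before release
    user_override: bool  # the end user rejected or overrode the answer


def reliability_metrics(events: Iterable[ResponseEvent]) -> Dict[str, float]:
    """Aggregate per-response events into rates suitable for dashboards."""
    items = list(events)
    if not items:
        raise ValueError("no events to aggregate")
    n = len(items)
    return {
        "responses": float(n),
        "factuality_rate": sum(e.factual for e in items) / n,
        "correction_rate": sum(e.corrected for e in items) / n,
        "override_rate": sum(e.user_override for e in items) / n,
    }


def meets_acceptance(metrics: Dict[str, float], min_factuality: float = 0.95,
                     max_override: float = 0.05) -> bool:
    """Compare observed rates with thresholds agreed before deployment."""
    return (metrics["factuality_rate"] >= min_factuality
            and metrics["override_rate"] <= max_override)


week = [
    ResponseEvent(factual=True, corrected=False, user_override=False),
    ResponseEvent(factual=True, corrected=True, user_override=False),
    ResponseEvent(factual=False, corrected=True, user_override=True),
    ResponseEvent(factual=True, corrected=False, user_override=False),
]
summary = reliability_metrics(week)
print(summary, meets_acceptance(summary))
```

In this made‑up sample, a 75% factuality rate and a 25% override rate fail the example thresholds, which is the kind of signal that should feed back into prompt, retrieval and data changes.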

Getting started with Kodora

Kodora helps Australian organisations diagnose why hallucinations occur in their specific workflows, quantify the base rate, and implement layered controls that combine retrieval, constrained generation, verification services and human‑in‑the‑loop design. We build the engineering and governance foundations (evaluation harnesses, content pipelines, monitoring and incident response) so your teams can scale generative AI with confidence. If you are asking how artificial intelligence hallucinations occur in your environment, we can help you turn that question into a roadmap for safer, more valuable AI.